Iteration

Designed for humans, tested by humans, adapted by Avril.

Putting our work in the hands of real users to get their feedback and observe their reactions is the best way to test or validate the work done so far.

User testing can help to reveal anything we may have missed, test assumptions and find out what needs to happen next. I have conducted a number of usability tests, and in each one I have been responsible for every aspect of the test: recruiting the participants, writing the scripts and scenarios, conducting the sessions, and analysing and writing up the findings. The findings are then fed back into the next design iteration as we move toward the optimal solution.

My approach to user testing varies with budget, timescales and access to the target audience. I choose the method that best fits the situation, be it lab-based, remote or "guerrilla" testing.

Getting users to come into a lab or to a face-to-face interview isn't always possible or practical. That's not a problem: there are still ways to get their feedback.

Conducting usability testing remotely lets us gather feedback while users remain in their own environment, where they would naturally use the product. This approach can be a quicker and cheaper alternative to lab-based testing.

These remote sessions can be either moderated or unmoderated. In a moderated session, I use screen-sharing technology to view the user's screen and conduct the session as if I were in the room with them. In an unmoderated session, the user is guided by a set of questions or scenarios while their screen and voice are recorded. The unmoderated approach can be a good option for task-specific tests, or when we simply want to see how people use certain parts of the product. Moderated sessions, on the other hand, let us react in the moment when a user does something of particular interest.

For both types of session, the findings are shared with the project team, including videos of participants using the site.

Once a product design has gone live, its usage and effect can be tracked through metrics.

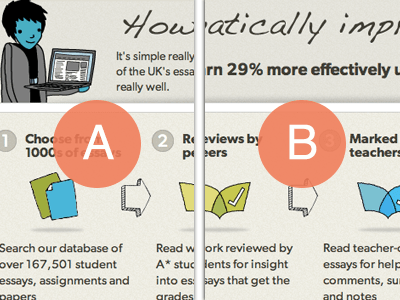

Subtle changes to wording or imagery can have a relatively large effect on how a page performs, influencing metrics such as bounce rate, conversion rate, click-through rate and dwell time. A/B testing allows me to compare the effectiveness of different versions of the same screen and identify future changes likely to yield positive results.
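To illustrate how two versions of a screen might be compared, here is a minimal Python sketch, assuming the metric is a conversion rate and that each visitor saw only one version. It uses a standard two-proportion z-test; the function name `two_proportion_z_test` and the visitor and conversion counts are hypothetical, not taken from a real test.

```python
import math


def two_proportion_z_test(conversions_a, visitors_a, conversions_b, visitors_b):
    """Compare the conversion rates of versions A and B (hypothetical helper).

    Returns (rate_a, rate_b, z, p_value), where p_value is two-sided.
    """
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        raise ValueError("No variation in outcomes; the test is undefined.")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Hypothetical counts: version B differs from A only in its headline wording.
rate_a, rate_b, z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p:.3f}")
```

A small p-value (conventionally below 0.05) suggests the difference between versions is unlikely to be due to chance alone. Fixing the sample size before the test starts, rather than stopping as soon as a result looks good, keeps that interpretation reliable.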